Adaptive hardware

Context-aware interfaces

What concept could interest the Microsoft Applied Sciences Group for a decade? Adaptive hardware: input devices that can change visually, and even potentially physically, based upon the relevant context.

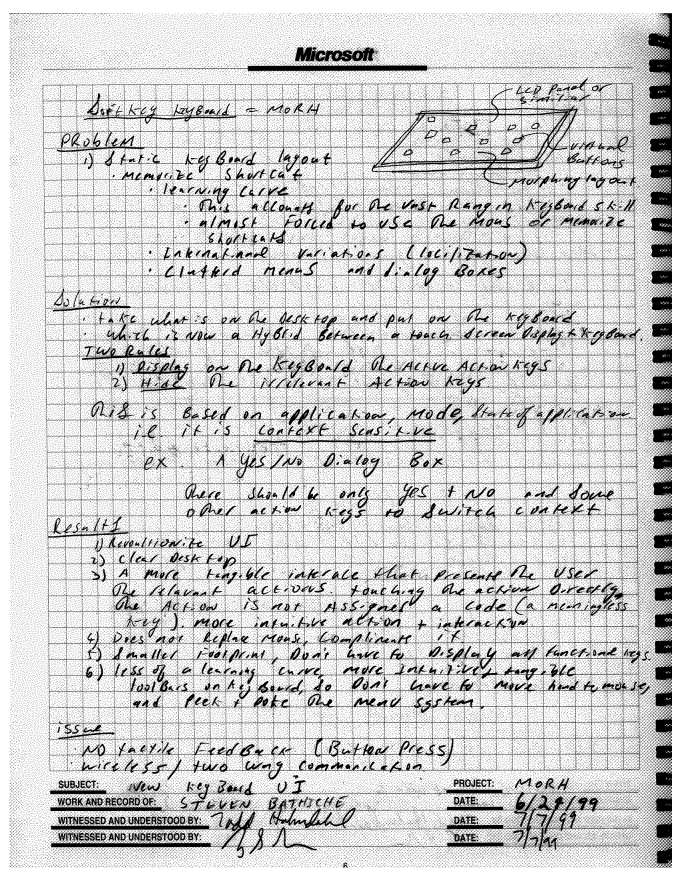

1999: The concept is born. A keyboard could be made that displays the active action keys and hides the irrelevant keys for a given application, application mode, and application state.
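The idea of showing only the keys relevant to a context can be sketched as a lookup table. This is an illustrative sketch only: the key names, the contexts, the legends, and the function `legends_for` are all hypothetical and do not come from the original project.

```python
# Hypothetical set of adaptable keys on the device.
ALL_KEYS = ["F1", "F2", "F3", "F4"]

# Hypothetical context table: (application, mode, state) -> {key: legend}.
LEGENDS = {
    ("editor", "text", "selection"): {"F1": "Bold", "F2": "Italic"},
    ("editor", "drawing", "idle"): {"F1": "Line", "F3": "Fill"},
}


def legends_for(application, mode, state):
    """Return a legend for every key; irrelevant keys get an empty legend."""
    active = LEGENDS.get((application, mode, state), {})
    return {key: active.get(key, "") for key in ALL_KEYS}


if __name__ == "__main__":
    print(legends_for("editor", "text", "selection"))
```

Keys missing from the active context map to an empty legend, which stands in for hiding the key.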

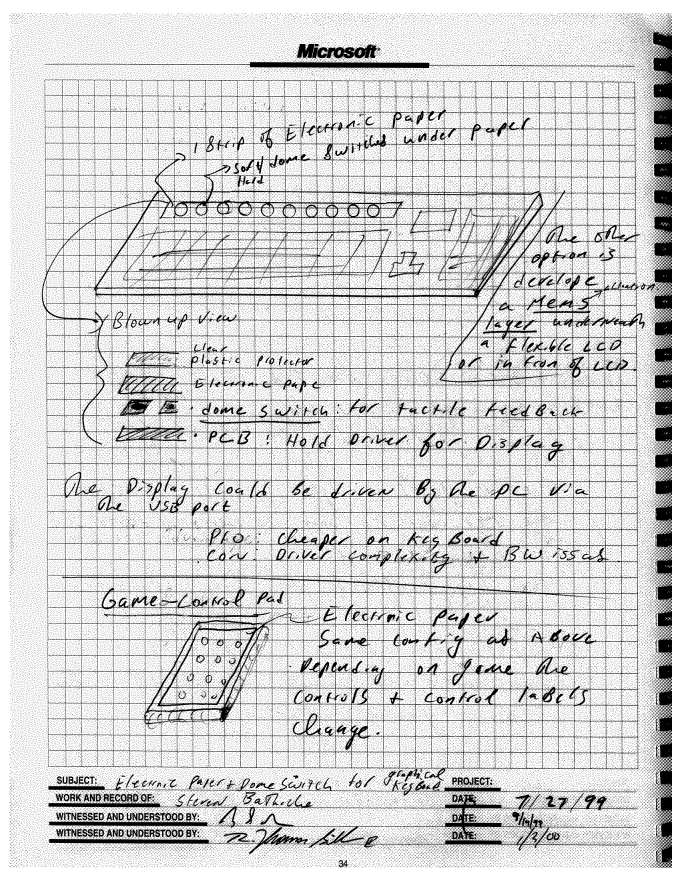

2000: An inexpensive solution was investigated. A layered electroluminescent display was made with pre-printed legends that changed depending upon which application currently had focus. Steven Bathiche, 2000:

2005: This project investigated getting the key’s image to appear on the top of the key rather than forcing the user to look down into the key. It used near-collimated light from an LED and a mask (which could be an LCD or any other transparent spatial light modulator) to transfer the image from the mask all the way to the tops of the keys. Steven Bathiche, 2005:

2007: A much richer interactive experience was explored. Here, a camera and projector were placed above the workspace and were programmed to recognize a physical keyboard and project adaptable legends onto the keyboard depending upon the context. Because this means that during use the image meant for the keyboard would display on the backs of the user’s hands, the hands were identified and masked from the keyboard image. The same system also used the area around the keyboard to create an interactive touch surface. Steven Bathiche and Andy Wilson, 2007:
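The hand-masking step described above can be sketched as follows. This is a minimal illustration under stated assumptions: the source does not say how the hands were identified, so the boolean `hand_mask` is assumed to be supplied by some separate detection step, and the function name `mask_hands` and the nested-list image format are hypothetical.

```python
def mask_hands(image, hand_mask, blank=0):
    """Blank out every projected pixel that would land on a hand.

    image     -- rows of pixel values intended for the keyboard (assumed format)
    hand_mask -- rows of booleans, True where a hand was detected (assumed input)
    blank     -- value projected onto hands, e.g. 0 for black
    """
    return [
        [blank if is_hand else pixel for pixel, is_hand in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, hand_mask)
    ]


if __name__ == "__main__":
    legends = [[9, 9, 9], [9, 9, 9]]
    hands = [[False, True, True], [False, False, True]]
    print(mask_hands(legends, hands))
```

Pixels under the mask are replaced with the blank value, so the legend image is not projected onto the backs of the user's hands.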

2008: A couple more approaches were explored. In one, an image was projected while its reflection was captured by a photodiode near the projector, similar to a bar code scanner. When the image was projected underneath the keys of a keyboard, it could be used to detect when a key was depressed by the light scattering back. Jonathan Westhues, Randy Crane, Amar Vattakandy, John Lewis, Steven Bathiche, 2008:

In this next video, you can see the projector and the camera working together. Note that by putting the photodiode’s signal through a high-pass filter, the system was made resilient to ambient light noise.
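The effect of high-pass filtering on ambient light can be illustrated with a first-order discrete filter. This is a sketch, not the prototype's circuit or firmware: the source does not say whether the filter was analog or digital, and the filter form, the `alpha` value, and the function name `high_pass` are assumptions made for illustration.

```python
def high_pass(samples, alpha=0.9):
    """First-order discrete high-pass filter.

    Implements y[n] = alpha * (y[n-1] + x[n] - x[n-1]), with y[0] = 0.
    A constant (ambient) level contributes nothing to the output,
    while fast changes in the signal pass through.
    """
    if not samples:
        return []
    out = [0.0]  # assumption: the filter starts settled on the first sample
    for prev, cur in zip(samples, samples[1:]):
        out.append(alpha * (out[-1] + cur - prev))
    return out


if __name__ == "__main__":
    ambient = 100                       # hypothetical steady ambient light level
    pulses = [0, 0, 3, 0, 0, 3, 0]      # hypothetical scattered-light pulses
    reading = [ambient + p for p in pulses]
    print(high_pass(reading))
```

Because the filter responds only to the difference between consecutive samples, adding a steady offset to the photodiode reading leaves the output unchanged.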

In another prototype, we enabled users to gesture on top of a mechanical keyboard with legends that could contextually adapt. This project explored various gesture interactions on top of and around such a device, a montage of which is seen in the following video. To build the prototype, we designed keycaps that moved the key mechanism to the sides. We then removed the diffuser from a Microsoft Surface, and placed diffusers on the top of each key. A transparent sheet was used for support of the keyboard and key depression contact feedback. With this setup, the projector from a Microsoft Surface projects up through the keys, giving an image on the key tops where the diffusers are. This also allowed us to project and see around the keyboard for gesture interactions that would be complementary to the key top interaction. Ben Eidelson, Meng Li, Glen Larson, 2008:

2009: More recently, the research team investigated how touch experiences might be effectively integrated with keyboard input. This prototype has a large, touch-sensitive display strip at the top with the display continuing underneath the keys. In addition, a software infrastructure was developed to quickly enable students participating in the 2010 Student Innovation Contest at the ACM Symposium on User Interface Software and Technology to create their own on-device experiences. Research Team, 2009:

Applied Sciences
