Creating a Metadata-Driven Processing Framework For Azure Data Factory

This open source code project delivers a simple metadata driven processing framework for Azure Data Factory and/or Azure Synapse Analytics (Intergate Pipelines). The framework is made possible by coupling the orchestration service with a SQL Database that houses execution batches, execution stages and pipeline metadata that is later called using an Azure Functions App. The execution batch, stage and worker pipeline structures allow; concurrent overall batch handling with inner stages of process dependencies executed in sequence. Then at the lowest level, all worker pipelines within a stage to be executed in parallel offering scaled out control flows where no inter-dependencies exist.

The framework is designed to integrate with any existing set of modular processing pipelines by making the lowest level executor a stand alone worker pipeline that is wrapped in a higher level of controlled (sequential) dependencies. This level of abstraction means operationally nothing about the monitoring of the orchestration processes is hidden in multiple levels of dynamic activity calls. Instead, everything from the processing pipeline doing the work (the Worker) can be inspected using out-of-the-box Azure monitoring features.

This framework can also be used in any Azure Tenant and allows the creation of complex control flows across multiple orchestration resources and even across Azure Tenant/Subscriptions by connecting Service Principal details through metadata to targeted Tenants > Subscriptions > Resource Groups > Orchestration Services and Pipelines, this offers granular administration over any data processing components in a given environment from a single point of easily controlled metadata.

Why use procfwk?

To answer the question of why use a metadata driven framework, please see the following YouTube video:

Framework Capabilities

Below is a simplified list of profrwk’s capabilities – be sure to check out the framework’s contents page for a full explanation of each capability and how they can be used:

- Interchangeable orchestration services.

- Azure Data Factory

- Azure Synapse Analytics (Intergate Pipelines)

- Granular metadata control.

- Metadata integrity checking.

- Global properties.

- Complete pipeline dependency chains.

- Batch executions (hourly/daily/monthly).

- Execution restart-ability.

- Parallel pipeline execution.

- Full execution and error logs.

- Operational dashboards.

- Low cost orchestration.

- Disconnection between framework and Worker pipelines.

- Cross Tenant/Subscription/orchestrator control flows.

- Pipeline parameter support.

- Simple troubleshooting.

- Easy deployment.

- Email alerting.

- Automated testing.

- Azure Key Vault integration.

- Is pipeline already running checks.

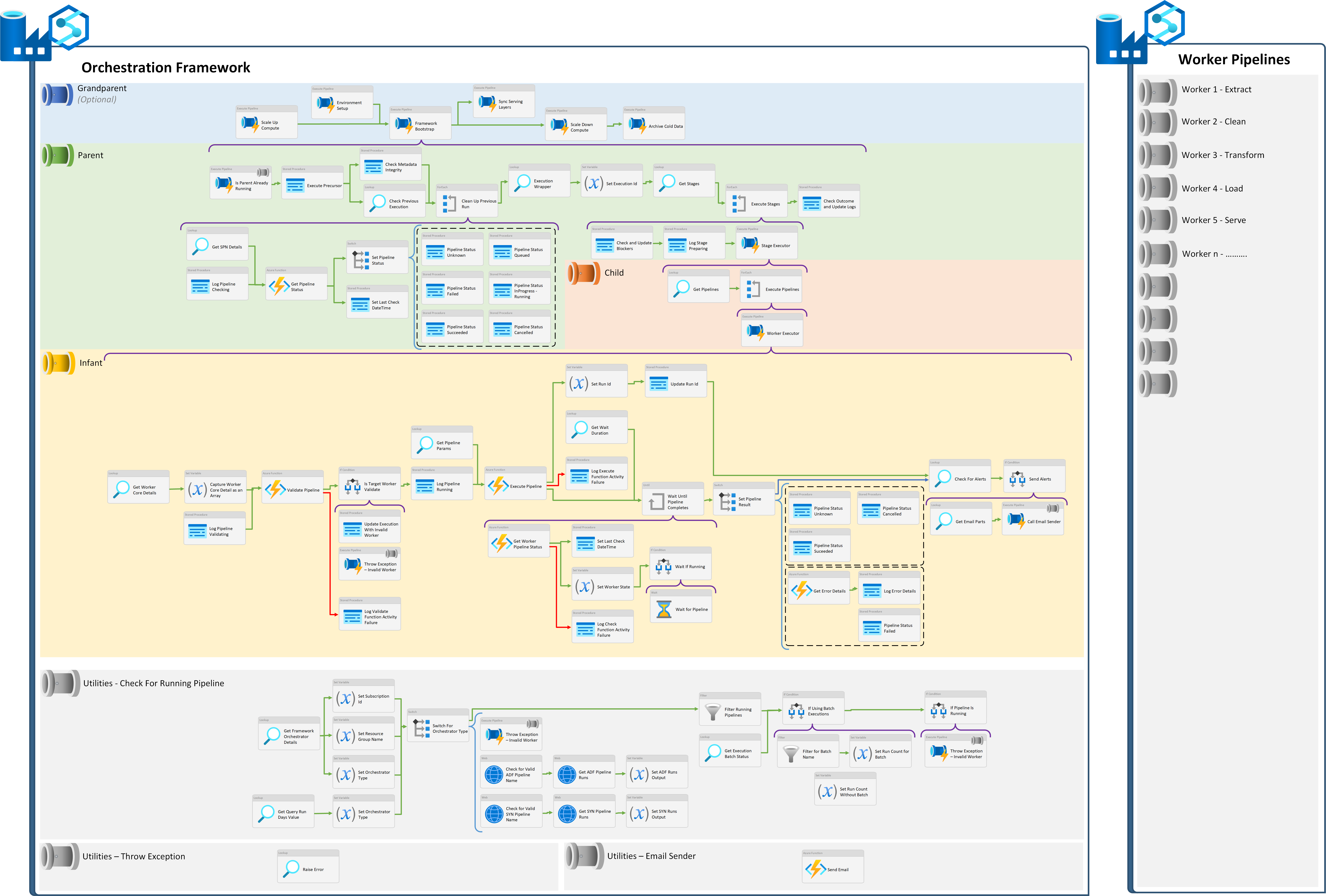

Complete Orchestrator Activity Chain

The following offers a view of all pipeline activities at every level within the processing framework if flattened out onto a single canvas. This applies regardless of the orchestrator type.

How to Deploy

For details on how to deploy the processing framework to your Azure Tenant see Deploying ProcFwk, which covers the basic steps you’ll need to take. Currently these steps assume a new deployment is being done, rather than an upgrade from a previous version of the framework. In addition, a reasonable working knowledge of using the Microsoft Azure platform is assumed when completing these action points.